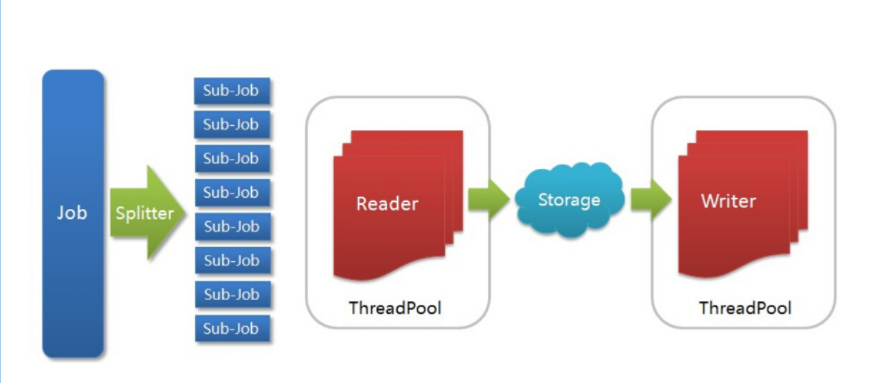

DataX Overview

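DataX is a tool for high-speed data exchange between heterogeneous databases and file systems. It can move data between any of the data-processing systems it supports (RDBMS/HDFS/local filesystem).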

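There are plenty of mature data import/export tools, but most of them handle only import or only export, and each supports just one or a few specific database types.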

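This creates a problem: if we run many different kinds of databases and file systems (MySQL/Oracle/RAC/Hive/others…) and frequently move data between them, we may have to develop, maintain, and learn a whole set of such tools (jdbcdump/dbloader/multithread/getmerge+sqlloader/mysqldumper…). Moreover, every new database type adds to the number of tools we need, so that number grows linearly. (When you needed to load MySQL data into Oracle, did you ever feel the urge to snap jdbcdump and dbloader in half and glue one half of each together?) Some of these tools stage data in intermediate files and others use pipes; both add overhead to the transfer to varying degrees, and their efficiency differs enormously.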

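Many tools also cannot meet common ETL requirements such as date-format conversion, special-character conversion, and character-encoding conversion.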

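In addition, we sometimes need to export one dataset from a database to several databases of different types at the same time, within a very short time window.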

DataX Environment Requirements

1. Linux (CentOS)

2. JDK 1.8 or above

3. Python (Python 2.6.x recommended)

4. Apache Maven 3.x (needed to compile DataX)
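Since DataX needs JDK 1.8 or above, a quick pre-flight check on the target machine can save a failed first run. The following is a minimal Python 3 sketch, not part of DataX (the helper names are hypothetical), that reads the version string printed by `java -version` and maps both the legacy `1.8.0_291` numbering and the newer `11.0.2` numbering to a major version.

```python
import re
import subprocess


def java_major_version(version_string: str) -> int:
    """Map a Java version string to its major version.

    Legacy scheme: "1.8.0_291" -> 8; newer scheme: "11.0.2" -> 11.
    """
    match = re.search(r"(\d+)(?:\.(\d+))?", version_string)
    if match is None:
        raise ValueError(f"unrecognized Java version: {version_string!r}")
    first = int(match.group(1))
    if first == 1 and match.group(2) is not None:
        return int(match.group(2))  # "1.x" style: the minor number is the real version
    return first


def meets_datax_requirement(version_string: str, minimum: int = 8) -> bool:
    """True if the JDK is 1.8 (major 8) or newer."""
    return java_major_version(version_string) >= minimum


def installed_java_version() -> str:
    """Return the quoted version from `java -version`, which prints to stderr.

    Raises FileNotFoundError if `java` is not on PATH.
    """
    proc = subprocess.run(["java", "-version"], capture_output=True, text=True)
    match = re.search(r'version "([^"]+)"', proc.stderr)
    if match is None:
        raise RuntimeError("could not parse `java -version` output")
    return match.group(1)
```

On the machine itself, `meets_datax_requirement(installed_java_version())` should return `True` before you go on to install DataX.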

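1. DataX deployment option 1: download the prebuilt DataX toolkit directly (DataX download link)

1.1 DataX deployment option 2: download the DataX source code and compile it yourself (DataX source code; not recommended)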

2. DataX-Web download

Pull the source code directly from Git and compile it, running the commands from the project root directory:

git clone https://github.com/WeiYe-Jing/datax-web.git

2.1 DataX-Web source package download

Download the official source package directly:

https://github.com/WeiYe-Jing/datax-web/blob/master/doc/datax-web/datax-web-deploy.md

[root@master opt]# rz

-rw-r--r--. 1 root root 829372407 6月 26 08:55 datax.tar.gz

-rw-r--r--. 1 root root 217566120 6月 26 10:59 datax-web-2.1.2.tar.gz

[root@master opt]# tar xf datax.tar.gz -C /usr/local/big/

[root@master opt]# tar xf datax-web-2.1.2.tar.gz -C /usr/local/big/
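Once both packages are unpacked, running DataX comes down to passing a JSON job file to `bin/datax.py`, which is what the next command does with the bundled `job/job.json`. The Python 3 sketch below builds a streamreader-to-streamwriter job using only keys that appear in the job files in this post (`sliceRecordCount`, `column`, `encoding`, `print`, `setting.speed.channel`), writes it to disk, and launches it the same way. The helpers `build_stream_job`, `datax_command` and `run_job` are hypothetical, and `/usr/local/big/datax` is just the install path used in this walkthrough.

```python
import json
import subprocess
from pathlib import Path


def build_stream_job(slice_record_count: int, channel: int, print_records: bool = True) -> dict:
    """Hypothetical helper: a streamreader -> streamwriter job as a dict.

    The two constant columns are copied from the stream2stream.json example.
    """
    return {
        "job": {
            "content": [
                {
                    "reader": {
                        "name": "streamreader",
                        "parameter": {
                            "sliceRecordCount": slice_record_count,
                            "column": [
                                {"type": "long", "value": "10"},
                                {"type": "string", "value": "hello,你好,世界-DataX"},
                            ],
                        },
                    },
                    "writer": {
                        "name": "streamwriter",
                        "parameter": {"encoding": "UTF-8", "print": print_records},
                    },
                }
            ],
            "setting": {"speed": {"channel": channel}},
        }
    }


def datax_command(datax_home: str, job_file: str, python: str = "python") -> list:
    """argv equivalent to `python {DATAX_HOME}/bin/datax.py {JSON_FILE_NAME}.json`."""
    return [python, str(Path(datax_home) / "bin" / "datax.py"), job_file]


def run_job(datax_home: str, job: dict, job_file: str) -> int:
    """Write the job as JSON, run it through datax.py, and return the exit code."""
    Path(job_file).write_text(json.dumps(job, indent=2), encoding="utf-8")
    return subprocess.run(datax_command(datax_home, job_file)).returncode
```

For example, `run_job("/usr/local/big/datax", build_stream_job(10, 5), "/tmp/stream2stream.json")` would write a job equivalent to the stream2stream.json example and return the exit code of the `datax.py` process. The `python` argument is left configurable because the interpreter that `datax.py` expects depends on the DataX version.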

[root@master opt]# cd /usr/local/big/datax/

[root@master datax]# python ./bin/datax.py ./job/job.json

DataX (DATAX-OPENSOURCE-3.0), From Alibaba !

Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved.

2021-06-26 08:59:38.950 [main] INFO VMInfo - VMInfo# operatingSystem class => sun.management.OperatingSystemImpl

2021-06-26 08:59:38.960 [main] INFO Engine - the machine info =>

osInfo: Oracle Corporation 1.8 25.291-b10

jvmInfo: Linux amd64 3.10.0-1160.el7.x86_64

cpu num: 4

totalPhysicalMemory: -0.00G

freePhysicalMemory: -0.00G

maxFileDescriptorCount: -1

currentOpenFileDescriptorCount: -1

GC Names [PS MarkSweep, PS Scavenge]

MEMORY_NAME | allocation_size | init_size

PS Eden Space | 256.00MB | 256.00MB

Code Cache | 240.00MB | 2.44MB

Compressed Class Space | 1,024.00MB | 0.00MB

PS Survivor Space | 42.50MB | 42.50MB

PS Old Gen | 683.00MB | 683.00MB

Metaspace | -0.00MB | 0.00MB

2021-06-26 08:59:38.981 [main] INFO Engine -

{

"content":[

{

"reader":{

"name":"streamreader",

"parameter":{

"column":[

{

"type":"string",

"value":"DataX"

},

{

"type":"long",

"value":19890604

},

{

"type":"date",

"value":"1989-06-04 00:00:00"

},

{

"type":"bool",

"value":true

},

{

"type":"bytes",

"value":"test"

}

],

"sliceRecordCount":100000

}

},

"writer":{

"name":"streamwriter",

"parameter":{

"encoding":"UTF-8",

"print":false

}

}

}

],

"setting":{

"errorLimit":{

"percentage":0.02,

"record":0

},

"speed":{

"byte":10485760

}

}

}

2021-06-26 08:59:39.000 [main] WARN Engine - prioriy set to 0, because NumberFormatException, the value is: null

2021-06-26 08:59:39.002 [main] INFO PerfTrace - PerfTrace traceId=job_-1, isEnable=false, priority=0

2021-06-26 08:59:39.002 [main] INFO JobContainer - DataX jobContainer starts job.

2021-06-26 08:59:39.005 [main] INFO JobContainer - Set jobId = 0

2021-06-26 08:59:39.024 [job-0] INFO JobContainer - jobContainer starts to do prepare ...

2021-06-26 08:59:39.025 [job-0] INFO JobContainer - DataX Reader.Job [streamreader] do prepare work .

2021-06-26 08:59:39.026 [job-0] INFO JobContainer - DataX Writer.Job [streamwriter] do prepare work .

2021-06-26 08:59:39.026 [job-0] INFO JobContainer - jobContainer starts to do split ...

2021-06-26 08:59:39.027 [job-0] INFO JobContainer - Job set Max-Byte-Speed to 10485760 bytes.

2021-06-26 08:59:39.028 [job-0] INFO JobContainer - DataX Reader.Job [streamreader] splits to [1] tasks.

2021-06-26 08:59:39.029 [job-0] INFO JobContainer - DataX Writer.Job [streamwriter] splits to [1] tasks.

2021-06-26 08:59:39.051 [job-0] INFO JobContainer - jobContainer starts to do schedule ...

2021-06-26 08:59:39.057 [job-0] INFO JobContainer - Scheduler starts [1] taskGroups.

2021-06-26 08:59:39.059 [job-0] INFO JobContainer - Running by standalone Mode.

2021-06-26 08:59:39.069 [taskGroup-0] INFO TaskGroupContainer - taskGroupId=[0] start [1] channels for [1] tasks.

2021-06-26 08:59:39.073 [taskGroup-0] INFO Channel - Channel set byte_speed_limit to -1, No bps activated.

2021-06-26 08:59:39.073 [taskGroup-0] INFO Channel - Channel set record_speed_limit to -1, No tps activated.

2021-06-26 08:59:39.085 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] attemptCount[1] is started

2021-06-26 08:59:39.386 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] is successed, used[303]ms

2021-06-26 08:59:39.387 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] completed it's tasks.

2021-06-26 08:59:49.082 [job-0] INFO StandAloneJobContainerCommunicator - Total 100000 records, 2600000 bytes | Speed 253.91KB/s, 10000 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.042s | All Task WaitReaderTime 0.067s | Percentage 100.00%

2021-06-26 08:59:49.082 [job-0] INFO AbstractScheduler - Scheduler accomplished all tasks.

2021-06-26 08:59:49.084 [job-0] INFO JobContainer - DataX Writer.Job [streamwriter] do post work.

2021-06-26 08:59:49.085 [job-0] INFO JobContainer - DataX Reader.Job [streamreader] do post work.

2021-06-26 08:59:49.086 [job-0] INFO JobContainer - DataX jobId [0] completed successfully.

2021-06-26 08:59:49.088 [job-0] INFO HookInvoker - No hook invoked, because base dir not exists or is a file: /usr/local/big/datax/hook

2021-06-26 08:59:49.094 [job-0] INFO JobContainer -

[total cpu info] =>

averageCpu | maxDeltaCpu | minDeltaCpu

-1.00% | -1.00% | -1.00%

[total gc info] =>

NAME | totalGCCount | maxDeltaGCCount | minDeltaGCCount | totalGCTime | maxDeltaGCTime | minDeltaGCTime

PS MarkSweep | 0 | 0 | 0 | 0.000s | 0.000s | 0.000s

PS Scavenge | 0 | 0 | 0 | 0.000s | 0.000s | 0.000s

2021-06-26 08:59:49.095 [job-0] INFO JobContainer - PerfTrace not enable!

2021-06-26 08:59:49.097 [job-0] INFO StandAloneJobContainerCommunicator - Total 100000 records, 2600000 bytes | Speed 253.91KB/s, 10000 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.042s | All Task WaitReaderTime 0.067s | Percentage 100.00%

2021-06-26 08:59:49.099 [job-0] INFO JobContainer -

任务启动时刻 : 2021-06-26 08:59:39

任务结束时刻 : 2021-06-26 08:59:49

任务总计耗时 : 10s

任务平均流量 : 253.91KB/s

记录写入速度 : 10000rec/s

读出记录总数 : 100000

读写失败总数 : 0

[root@master opt]# git clone git@github.com:alibaba/DataX.git

[root@master opt]# cd {DataX_source_code_home}

[root@master opt]# mvn -U clean package assembly:assembly -Dmaven.test.skip=true

[INFO] BUILD SUCCESS

[INFO] -----------------------------------------------------------------

[INFO] Total time: 08:59 min

[INFO] Finished at: 2021-06-26T08:59:49+08:00

[INFO] Final Memory: 133M/960M

[INFO] -----------------------------------------------------------------

The build output has the following structure:

[root@master opt]# cd {DataX_source_code_home}

[root@master opt]# ls ./target/datax/datax/

bin conf job lib log log_perf plugin script tmp

You can view a configuration template with the command: python datax.py -r {YOUR_READER} -w {YOUR_WRITER}

DataX (UNKNOWN_DATAX_VERSION), From Alibaba !

Copyright (C) 2010-2015, Alibaba Group. All Rights Reserved.

Please refer to the streamreader document:

https://github.com/alibaba/DataX/blob/master/streamreader/doc/streamreader.md

Please refer to the streamwriter document:

https://github.com/alibaba/DataX/blob/master/streamwriter/doc/streamwriter.md

Please save the following configuration as a json file and use

python {DATAX_HOME}/bin/datax.py {JSON_FILE_NAME}.json

to run the job.

{

"job": {

"content": [

{

"reader": {

"name": "streamreader",

"parameter": {

"column": [],

"sliceRecordCount": ""

}

},

"writer": {

"name": "streamwriter",

"parameter": {

"encoding": "",

"print": true

}

}

}

],

"setting": {

"speed": {

"channel": ""

}

}

}

}

#stream2stream.json

{

"job": {

"content": [

{

"reader": {

"name": "streamreader",

"parameter": {

"sliceRecordCount": 10,

"column": [

{

"type": "long",

"value": "10"

},

{

"type": "string",

"value": "hello,你好,世界-DataX"

}

]

}

},

"writer": {

"name": "streamwriter",

"parameter": {

"encoding": "UTF-8",

"print": true

}

}

}

],

"setting": {

"speed": {

"channel": 5

}

}

}

}

[root@master opt]# cd {YOUR_DATAX_DIR_BIN}

[root@master bin]# python datax.py ./stream2stream.json...

2021-06-26 09:19:49.099 [job-0] INFO JobContainer -

任务启动时刻 : 2021-06-26 09:19:39

任务结束时刻 : 2021-06-26 09:19:49

任务总计耗时 : 10s

任务平均流量 : 253.91KB/s

记录写入速度 : 10000rec/s

读出记录总数 : 100000

读写失败总数 : 0

1. Enter the source directory

[root@master big]# cd datax-web-2.1.2/

[root@master datax-web-2.1.2]# ll

总用量 28

drwxrwxrwx. 3 root root 104 6月 23 2020 bin

drwxr-xr-x. 2 root root 77 6月 26 11:00 packages

-rwxrwxrwx. 1 root root 13455 6月 23 2020 README.md

-rwxrwxrwx. 1 root root 9177 6月 23 2020 userGuid.md

2. Run the one-click install script

Go into the unpacked directory and find install.sh under the bin directory. For an interactive installation, run it directly:

## Interactive installation:

[root@master datax-web-2.1.2]# ./bin/install.sh

## Silent installation:

[root@master datax-web-2.1.2]# ./bin/install.sh --force

## Interactive installation details:

2021-06-26 11:00:56.126 [INFO] (2602) Creating directory: [/usr/local/big/datax-web-2.1.2/bin/../modules].

2021-06-26 11:00:56.136 [INFO] (2602) ####### Start To Uncompress Packages ######

2021-06-26 11:00:56.142 [INFO] (2602) Uncompressing....

Do you want to decompress this package: [datax-admin_2.1.2_1.tar.gz]? (Y/N)y

2021-06-26 11:01:14.345 [INFO] (2602) Uncompress package: [datax-admin_2.1.2_1.tar.gz] to modules directory

Do you want to decompress this package: [datax-executor_2.1.2_1.tar.gz]? (Y/N)y

2021-06-26 11:01:25.314 [INFO] (2602) Uncompress package: [datax-executor_2.1.2_1.tar.gz] to modules directory

2021-06-26 11:01:25.652 [INFO] (2602) ####### Finish To Umcompress Packages ######

Scan modules directory: [/usr/local/big/datax-web-2.1.2/bin/../modules] to find server under dataxweb

2021-06-26 11:01:25.661 [INFO] (2602) ####### Start To Install Modules ######

2021-06-26 11:01:25.665 [INFO] (2602) Module servers could be installed:

[datax-admin] [datax-executor]

Do you want to confiugre and install [datax-admin]? (Y/N)y

2021-06-26 11:01:28.265 [INFO] (2602) Install module server: [datax-admin]

Start to make directory

2021-06-26 11:01:28.323 [INFO] (2884) Start to build directory

2021-06-26 11:01:28.328 [INFO] (2884) Creating directory: [/usr/local/big/datax-web-2.1.2/modules/datax-admin/bin/../logs].

2021-06-26 11:01:28.435 [INFO] (2884) Directory or file: [/usr/local/big/datax-web-2.1.2/modules/datax-admin/bin/../conf] has bee

2021-06-26 11:01:28.441 [INFO] (2884) Creating directory: [/usr/local/big/datax-web-2.1.2/modules/datax-admin/bin/../data].

end to make directory

Start to initalize database

Do you want to confiugre and install [datax-executor]? (Y/N)y

2021-06-26 11:01:30.552 [INFO] (2602) Install module server: [datax-executor]

2021-06-26 11:01:30.619 [INFO] (2944) Start to build directory

2021-06-26 11:01:30.625 [INFO] (2944) Creating directory: [/usr/local/big/datax-web-2.1.2/modules/datax-executor/bin/../logs].

2021-06-26 11:01:30.718 [INFO] (2944) Directory or file: [/usr/local/big/datax-web-2.1.2/modules/datax-executor/bin/../conf] has

2021-06-26 11:01:30.723 [INFO] (2944) Creating directory: [/usr/local/big/datax-web-2.1.2/modules/datax-executor/bin/../data].

2021-06-26 11:01:30.816 [INFO] (2944) Creating directory: [/usr/local/big/datax-web-2.1.2/modules/datax-executor/bin/../json].

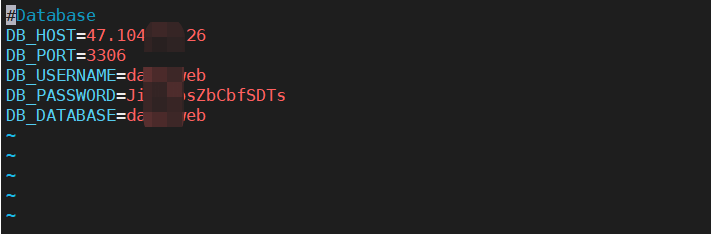

2021-06-26 11:01:30.889 [INFO] (2602) ####### Finish To Install Modules ######

3. Configure the MySQL database connection

[root@master datax-web-2.1.2]# vim ./modules/datax-admin/conf/bootstrap.properties

#Database

DB_HOST=47.1*4.*5.***

DB_PORT=3306

DB_USERNAME=data-web

DB_PASSWORD=JiLZXbsZbCbs

DB_DATABASE=data-web
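Before starting datax-admin, it can be worth sanity-checking this file. The Python 3 sketch below parses the simple `KEY=VALUE` lines shown above, reports which of the five `DB_*` keys are missing or empty, and assembles a MySQL JDBC URL. The helper names are hypothetical, and the URL shape `jdbc:mysql://host:port/database` is an assumption: the exact URL datax-admin builds from these values, including any extra query parameters, is not shown in this post.

```python
REQUIRED_KEYS = ("DB_HOST", "DB_PORT", "DB_USERNAME", "DB_PASSWORD", "DB_DATABASE")


def parse_properties(text: str) -> dict:
    """Parse KEY=VALUE lines, skipping blanks and '#' comments.

    Deliberately simple: no escapes, line continuations, or ':' separators.
    """
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:  # ignore lines that contain no '='
            props[key.strip()] = value.strip()
    return props


def missing_keys(props: dict) -> list:
    """Return the required DB_* keys that are absent or empty."""
    return [key for key in REQUIRED_KEYS if not props.get(key)]


def mysql_jdbc_url(props: dict) -> str:
    # Assumed URL shape; datax-admin may add its own query parameters.
    return f"jdbc:mysql://{props['DB_HOST']}:{props['DB_PORT']}/{props['DB_DATABASE']}"
```

Usage would be along the lines of `parse_properties(open("./modules/datax-admin/conf/bootstrap.properties", encoding="utf-8").read())`, followed by checking that `missing_keys(...)` returns an empty list.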

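After several years of development, DataX now has a fairly comprehensive plugin ecosystem: mainstream RDBMS databases, NoSQL stores, and big-data computing systems are all supported. DataX currently supports the following data sources: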
